What will the next-generation AI processor be like?

Next-generation software is not algorithmic; it is built on mathematics that mimics nature. How will a processor for this Software 2.0 operate?

Information technology is moving away from its current roles of computation and record keeping toward artificial systems. This shift in application demand cannot be served by algorithms and needs a new processor operating paradigm. The present concept of a single program counter acting as the master conductor that executes programs will not suffice for these new demands. The new paradigm should be based on how nature operates, i.e., like chemistry.

Nature does not have a master scheduler. Everything we observe in nature, even a simple ant, is a process. Ants interact with each other to form colonies, and some interactions in an ant colony are visible while others are hidden. Like an ant colony, an artificial system is a process made of many components with observable and hidden actions. A process proceeds when its activities coordinate, so by its very nature it is a network. Applications of this kind have a network topology, i.e., they are network applications.

The Software 2.0 paradigm follows this property seen in nature: it expresses processes as networks of smaller, autonomously operating agents. The next-generation processor should therefore model processes as a network of autonomously operating agents.

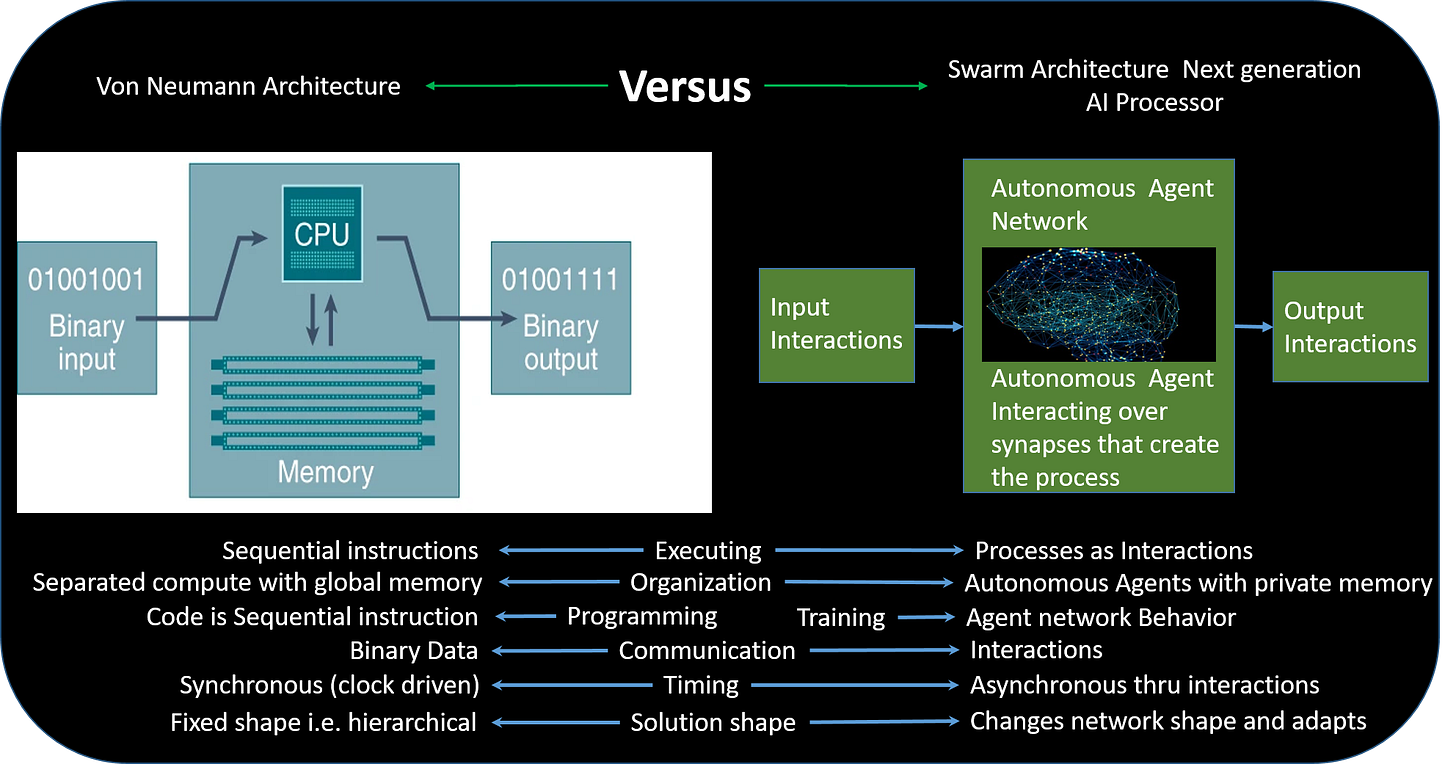

The picture above shows how drastically different the next-generation processor would be. The following paragraphs describe the differences between the two processor types in more detail.

Execution: The biggest change is that the AI processor has no central processor. Instead, a system is a network of autonomous agents that run independently and talk to each other. When these agents interact, the process state changes, and these changes can be observed as either input or output interactions.
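
The article does not say how such agents would be implemented. As a rough illustration only, the sketch below borrows the actor style of concurrency: each hypothetical `Agent` is a Python thread with its own private `state` and its own `inbox` queue, and no central loop decides who runs next. Putting values into the first agent's inbox stands in for an input interaction, and the `observed` queue stands in for an output interaction. All names here are illustrative assumptions, not part of any real AI processor.

```python
import queue
import threading


class Agent(threading.Thread):
    """Hypothetical autonomous agent: own control loop, private memory, private inbox."""

    def __init__(self, name, behaviour):
        super().__init__(name=name, daemon=True)
        self.inbox = queue.Queue()   # the only way other parties can reach this agent
        self.state = {}              # private memory; no other agent touches it
        self.behaviour = behaviour   # how this agent reacts to one incoming message
        self.outputs = []            # queues this agent sends its results to

    def connect(self, target_queue):
        self.outputs.append(target_queue)

    def run(self):
        # No scheduler calls this agent: it blocks on its own inbox and reacts.
        while True:
            msg = self.inbox.get()
            if msg is None:                  # shutdown signal, forwarded downstream
                for q in self.outputs:
                    q.put(None)
                return
            result = self.behaviour(self.state, msg)
            if result is not None:
                for q in self.outputs:
                    q.put(result)


def count(state, msg):
    # Keeps a running count in this agent's private state.
    state["seen"] = state.get("seen", 0) + 1
    return (msg, state["seen"])


def double(state, msg):
    value, n = msg
    return (value * 2, n)


counter = Agent("counter", count)
doubler = Agent("doubler", double)
observed = queue.Queue()             # stands in for an output interaction

counter.connect(doubler.inbox)
doubler.connect(observed)
counter.start()
doubler.start()

for x in (1, 2, 3):
    counter.inbox.put(x)             # stands in for an input interaction
counter.inbox.put(None)

results = []
while (item := observed.get()) is not None:
    results.append(item)
counter.join()
doubler.join()

print(results)  # [(2, 1), (4, 2), (6, 3)]
```

Because each agent reads only its own inbox and writes only to the queues it is connected to, the agents never share memory, which mirrors the point that each agent has its own control and memory.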

Compute/Memory Organization: Unlike present-day processors, there is no common memory or CPU. Each autonomous agent has its own control and memory. Because no agent can directly read or overwrite another agent's memory, systems built on this processor are more secure and safe. Such systems are also decoupled, so parts can evolve without breaking the whole, akin to the self-healing property found in nature.

Programming vs. Training: Software 1.0 languages manipulate data in memory; the program defines step by step how the data is manipulated. In a Software 2.0 language, there is no programming. Instead, the language trains how the different agents in the network interact with each other.

Communication: Outside systems feed data into a current processor by sending it as binary input. In the next-generation processor, by contrast, outside systems give input to a process through its input interactions and sense the process through its output interactions. Such systems are secure by nature, since only visible interactions can be used from outside.
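
To make the idea of visible and hidden interactions concrete, here is a minimal sketch in the spirit of process calculi such as CCS, where a restricted channel cannot be used from outside a process. The hypothetical `Process` below contains two internal agents (`_detector` and `_alarm`) joined by a hidden interaction; an outside system can only call `interact` on the declared input `reading` and `sense` on the declared output `alarm`. Python cannot truly hide attributes, so the leading underscores and the name checks merely stand in for the isolation the article envisions, and the threshold logic is an invented example.

```python
class Process:
    """Hypothetical process: two internal agents joined by a hidden interaction."""

    VISIBLE_INPUTS = frozenset({"reading"})
    VISIBLE_OUTPUTS = frozenset({"alarm"})

    def __init__(self, threshold):
        self._threshold = threshold
        self._hidden = []       # hidden interaction between the two internal agents
        self._alarm_log = []    # everything emitted on the visible "alarm" output

    # Internal agents: not part of the visible interface.
    def _detector(self, value):
        # Turns a raw reading into an event on the hidden interaction.
        self._hidden.append(value > self._threshold)

    def _alarm(self):
        # Consumes hidden events and drives the visible output.
        while self._hidden:
            self._alarm_log.append("ALARM" if self._hidden.pop(0) else "ok")

    # The only entry points an outside system is given.
    def interact(self, name, value):
        if name not in self.VISIBLE_INPUTS:
            raise PermissionError(f"no visible input interaction named {name!r}")
        self._detector(value)
        self._alarm()

    def sense(self, name):
        if name not in self.VISIBLE_OUTPUTS:
            raise PermissionError(f"no visible output interaction named {name!r}")
        return list(self._alarm_log)


p = Process(threshold=50)
for reading in (20, 75, 40):
    p.interact("reading", reading)
print(p.sense("alarm"))  # ['ok', 'ALARM', 'ok']

blocked = False
try:
    p.interact("hidden", True)  # not a visible interaction, so it is refused
except PermissionError:
    blocked = True
print(blocked)  # True
```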

Timing: In a von Neumann processor, programs advance with a clock. The next-generation AI processor, i.e., a non-von Neumann processor, advances when the different agents in the network interact with each other. There is no master clock keeping track of progress.
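
One established formalism in which progress is driven by interactions rather than by a clock is the Petri net: a transition fires whenever each of its input places holds enough tokens, and the only record of progress is the sequence of firings. The sketch below is a minimal Petri-net token game, offered as an analogy of our choosing rather than as the article's design; the `produce`/`consume` net is a hypothetical example. For determinism it always fires the first enabled transition, whereas a real Petri net may fire any enabled one.

```python
from collections import Counter


def run_petri_net(initial_marking, transitions):
    """Fire enabled transitions until none is enabled.

    initial_marking: dict place -> token count
    transitions: dict name -> (inputs, outputs), each a dict place -> tokens
    Returns the sequence of fired transitions and the final marking.
    No clock is involved: progress is only the list of interactions that happened.
    """
    marking = Counter(initial_marking)
    fired = []
    while True:
        enabled = [name for name, (inputs, _) in transitions.items()
                   if all(marking[p] >= n for p, n in inputs.items())]
        if not enabled:
            return fired, marking
        # Deterministic choice for the sketch; a real net may fire any enabled transition.
        name = enabled[0]
        inputs, outputs = transitions[name]
        for p, n in inputs.items():
            marking[p] -= n          # consume tokens from input places
        for p, n in outputs.items():
            marking[p] += n          # produce tokens in output places
        fired.append(name)


# Hypothetical producer/consumer net.
transitions = {
    "produce": ({"ready": 1}, {"buffer": 1}),
    "consume": ({"buffer": 1, "consumer_idle": 1}, {"done": 1, "consumer_idle": 1}),
}
fired, final = run_petri_net({"ready": 2, "consumer_idle": 1}, transitions)
print(fired)          # ['produce', 'produce', 'consume', 'consume']
print(final["done"])  # 2
```

Nothing in `run_petri_net` counts ticks: the loop ends only when no further interaction is possible, so a net that always has an enabled transition would simply keep running.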

In conclusion, with the next-generation AI processor, we can finally create things that are artificial.
